Fifteen minutes on LinkedIn, thousands of likes, and a proud post about an appointment booking app “built in record time” with an end-to-end AI builder. What looked like a success story was also a textbook case of vibe coding security vulnerabilities: the admin username and password, hardcoded in the front end for anyone to see.

AI-driven development has also come under scrutiny lately. Tea, a buzzy social platform, made headlines for a massive breach that leaked thousands of selfies, IDs, and over a million private messages. While there is no public evidence that Tea used vibe coding, some observers and security experts noted that the incident highlights how rapid AI-driven development can amplify security risks.

The benefits of AI-driven vibe coding are clear, but so are the security vulnerabilities that come with it. This article looks at common risks in both end-to-end AI builders and developer coding assistants – and what can be done to address them without slowing down innovation.

Vibe coding platforms aren’t all the same

Vibe coding removes friction. You describe the outcome and get working software. Non‑engineers can turn a brief into a live app without learning a stack. Engineers trade boilerplate for focus on product logic. One person now ships what used to take a small team. The economics are hard to ignore. Faster delivery, lower cost, more experiments – and many more people who can build things than before.

Speed and access do not make vibe coding inherently risky, but they mean security needs a seat at the table from the start. AI will give you code that runs – it will not decide who should be allowed to see what, or how to keep secrets out of public code bundles. If you do not push for safe defaults, you will not get them.

End-to-end builders vs. developer assistants

There are two broad families that introduce vibe coding security risks in different ways:

End‑to‑end builders (such as Lovable, Base44, and V0) are for people who want a URL today. You type what you want, the platform wires up the UI, data, and hosting. You might never open a repository. That is the appeal, but it is also the risk. If you do not read code, you will not notice hardcoded credentials, missing authorization checks, or a storage bucket that is open to the world. The defaults and publish flow carry most of the security burden.

Developer assistants (such as GitHub Copilot, Cursor, and Claude) are for engineers working directly in code. They are fantastic at moving you from empty file to working function: they produce code quickly, but also bring imports you did not vet, scaffolds you did not design, and patterns that compile but do not necessarily match your trust boundaries.

Builders concentrate risk in invisible defaults, while developer assistants spread risk across a larger code surface. In both cases, the model has no sense of your threat model, your data sensitivity, or who should be allowed to do what. When everyone can ship code, ownership of security gets fuzzy.

Understanding these differences helps explain why certain security issues keep showing up in vibe-coded projects, and why they take different forms depending on the tool – a key focus when exploring generative AI security concerns.

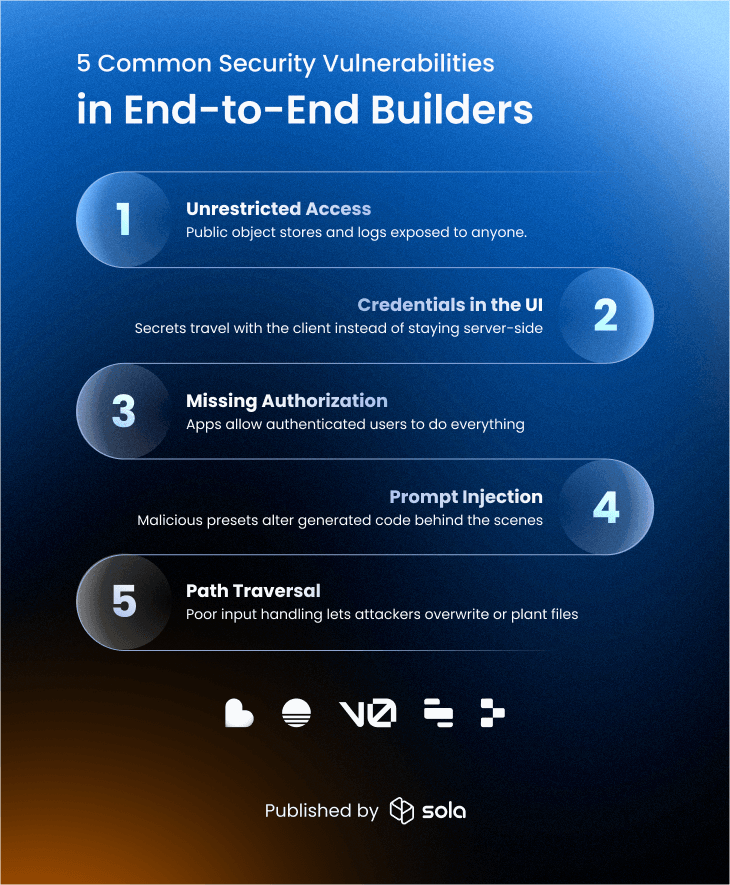

Common vulnerabilities in end-to-end builders

End-to-end builders are great at hiding complexity. That is the upside and the risk. Many publishers never read the code, so they assume the platform handles security. Here is where things usually break:

Unrestricted access to resources

End-to-end builders optimize for a working demo. You get public object stores for images, verbose server logs exposed in the client, and helper endpoints that were meant for testing but are now internet-facing.

Impact: Easy data leakage, token exposure in logs, and reconnaissance for later attacks.
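
One way to reduce token exposure in logs is to scrub obvious secrets before a record is written. The following is a minimal sketch, not a complete solution: the pattern list and the names `redact` and `RedactingFilter` are illustrative assumptions, and it only handles simple `key=value` or `key: value` forms.

```python
import logging
import re

# Hypothetical pattern list; extend it for the formats your providers use.
SECRET_PATTERN = re.compile(
    r"(?i)\b(password|token|api[_-]?key|secret)\b(\s*[=:]\s*)(\S+)"
)


def redact(message: str) -> str:
    """Replace the value in key=value style secrets with a placeholder."""
    return SECRET_PATTERN.sub(r"\1\2[REDACTED]", message)


class RedactingFilter(logging.Filter):
    """Logging filter that scrubs secrets before a record is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format the message first so secrets passed as args are covered too.
        record.msg = redact(record.getMessage())
        record.args = ()
        return True
```

Attaching the filter with `handler.addFilter(RedactingFilter())` applies it to everything that handler emits. It complements, rather than replaces, keeping server logs out of the client in the first place.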

Credentials and secret management

Prompts say “connect to provider X”, but might not reference how the credentials are stored and fetched securely. Builders make it simple to reference keys, but unless the app enforces a server only boundary and unique storage locations, your secrets might ride along with the UI.

Impact: Live keys reused by others, surprise bills on third party APIs, and access to your cloud or SaaS accounts.
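
A server-only boundary can be sketched in a few lines. The example below assumes secrets live in environment variables on the server and that only an explicit allowlist of settings is ever sent to the browser; `get_secret`, `client_config`, and the key names are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not configured on the server."""


def get_secret(name: str) -> str:
    """Read a secret from the server environment; never from client code."""
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"Secret {name!r} is not configured")
    return value


# Hypothetical allowlist of settings that are safe to ship to the browser.
PUBLIC_CONFIG_KEYS = {"APP_NAME", "API_BASE_URL"}


def client_config(settings: dict[str, str]) -> dict[str, str]:
    """Return only allowlisted settings for the client bundle."""
    return {k: v for k, v in settings.items() if k in PUBLIC_CONFIG_KEYS}
```

The design choice is the allowlist: anything not explicitly marked public stays on the server, so a newly added key cannot leak into the UI by accident.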

Missing authorization

Login is visible and easy to describe. Authorization is invisible and easy to forget. Many generated apps implement sign in, then treat any authenticated user as allowed to read or write anything – which is how a single tenant app becomes a multi-tenant data leak.

Impact: Unauthorized data exposure and privilege escalation. For example, one user viewing another’s records, or ordinary accounts being turned into admins.
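
The gap between "signed in" and "allowed" is easiest to see in code. This is a minimal sketch of a resource-level check for an appointment app; the `User`, `Booking`, and `get_booking` names are assumptions made for illustration, and `store` stands in for whatever data layer the app uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    id: str
    is_admin: bool = False


@dataclass(frozen=True)
class Booking:
    id: str
    owner_id: str


class Forbidden(Exception):
    """Raised when the caller may not access the requested record."""


def can_read(user: User, booking: Booking) -> bool:
    """Being signed in is not enough: the user must own the record."""
    return user.is_admin or booking.owner_id == user.id


def get_booking(user: User, booking_id: str, store: dict[str, Booking]) -> Booking:
    booking = store.get(booking_id)
    # Same error for "missing" and "not yours" so IDs cannot be probed.
    if booking is None or not can_read(user, booking):
        raise Forbidden("not found or not allowed")
    return booking
```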

Prompt injection through platform context

Builders feed on text you provide and presets you import. Copy pasted snippets, shared rules files, or community presets can carry hidden instructions that quietly change what gets generated or even what gets published. The creator thinks they imported a preference file. An attacker sees a control plane that can weaken checks or wire insecure behavior into the app.

Impact: Security controls can be subverted, creating persistent backdoors and enabling data exfiltration through features that appear legitimate.
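
One narrow check that can help before importing a shared rules file or preset is to look for invisible characters, which are sometimes used to hide text from a human reviewer. The sketch below flags Unicode format characters (category `Cf`, which includes zero-width and bidirectional control characters). It is a partial heuristic under that assumption, and it does nothing against malicious instructions written in plain sight; `find_invisible_chars` is a hypothetical helper name.

```python
import unicodedata


def find_invisible_chars(text: str) -> list[tuple[int, int, str]]:
    """Return (line, column, code point) for each Unicode format character."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                findings.append((lineno, col, f"U+{ord(ch):04X}"))
    return findings
```

Any hit is a reason to read the file carefully before trusting it, in the same way you would review untrusted code.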

Path traversal from helper features

A recurring failure is improper input handling that enables injection attacks. One common case is path traversal: an export function writes a report to disk by joining a base folder like tmp/ with the filename a user supplies. Without validation, an attacker can include ../ to climb out of tmp and write elsewhere – for example, public/report.html instead of tmp/report.pdf. This lets them overwrite server files or plant malicious content that a CDN would happily serve.

Impact: Attackers could overwrite or corrupt files, introduce persistent backdoors, serve malicious content to users, or tamper with other tenants’ data.
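
The export scenario above can be guarded by resolving the final path and confirming it still sits inside the base folder. This is a minimal sketch assuming Python 3.9 or later; `safe_export_path` is a hypothetical helper name.

```python
from pathlib import Path


def safe_export_path(base_dir: str, filename: str) -> Path:
    """Join base_dir and filename, rejecting anything that escapes base_dir."""
    base = Path(base_dir).resolve()
    candidate = (base / filename).resolve()
    # resolve() collapses ../ segments, so an escape shows up as a path
    # that is no longer inside base. An empty name resolves to base itself.
    if candidate == base or not candidate.is_relative_to(base):
        raise ValueError(f"unsafe export filename: {filename!r}")
    return candidate
```

With this check, `safe_export_path("tmp", "report.pdf")` returns a path inside `tmp`, while `../public/report.html` or an absolute path such as `/etc/passwd` raises `ValueError` instead of being written.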

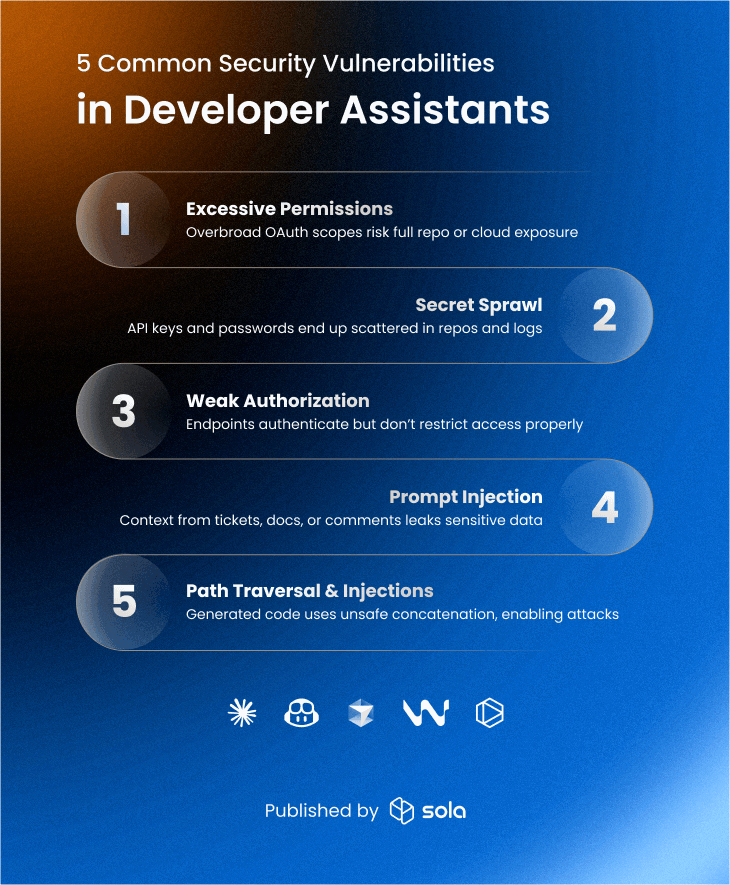

Common vulnerabilities in developer coding assistants

Developer assistants are great at speeding up real engineering work. That is the upside and the risk. They pour out code that compiles, and people tend to trust the autocomplete. Prompts describe what to build, not who may use it or how secrets are handled. The result is a lot of new code that runs while trust boundaries remain undefined.

Excessive permissions to repos and cloud

Assistants and their plugins want to be helpful right away, so they ask for broad OAuth scopes across your GitHub or cloud accounts. Under deadline pressure, people click Accept and move on. The trial becomes the new normal, and those scopes stick around. Some tools also sync context back to the vendor by design, which means code and docs that were meant to stay inside the org now exist elsewhere.

Impact: If a tool, plugin, or even the vendor is compromised, attackers could access entire repos, cloud resources, or sensitive docs. Even without a direct breach, granting excessive scopes increases the blast radius of account misuse and data leakage.
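
A scope review can start as a simple comparison between what a tool was granted and what it actually needs. The sketch below assumes you can list the granted scopes for each tool; the policy table, the tool name, and `excess_scopes` are hypothetical.

```python
# Hypothetical policy: the scopes each tool actually needs.
ALLOWED_SCOPES = {
    "code-assistant": {"read:user", "repo:status"},
}


def excess_scopes(tool: str, granted: set[str]) -> set[str]:
    """Return scopes granted to a tool beyond what the policy allows.

    Unknown tools have no allowed scopes, so everything they hold is flagged.
    """
    return set(granted) - ALLOWED_SCOPES.get(tool, set())
```

For example, a tool that holds the broad `repo` scope when the policy only allows `repo:status` would be reported, which makes the "trial became the new normal" case visible.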

Credential and secret sprawl

Moving fast invites shortcuts. A developer pastes an API token to keep the tutorial flowing, commits an example .env for the team, or leaves a debug print that logs a password. Assistants happily generate snippets that work without insisting on vaults or secret managers. The result is secrets scattered across repos, commit history, CI logs, and snippet caches where they quietly persist. GitHub API tokens in code are a frequent casualty of this kind of sprawl, often discovered only after attackers have reused them against production services.

Impact: Attackers harvest keys from history or logs and reuse them against production services and cloud accounts.
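
A small pre-commit style check can catch some of these before they reach history. The sketch below looks for two widely documented token shapes (GitHub tokens with a `ghp_`-style prefix and AWS access key IDs starting with `AKIA`). The pattern list is an assumption and far from exhaustive, so treat it as a starting point rather than a replacement for a dedicated secret scanner.

```python
import re

# Illustrative patterns only; real scanners cover many more formats.
PATTERNS = {
    "github_token": re.compile(r"\bgh[pousr]_[A-Za-z0-9]{36,}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) for each suspected secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```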

Missing authorization in handlers

Ask for a working endpoint and you will get one. Generated handlers often check only that a user is authenticated, not that they are allowed to access this record or perform this action. This is exactly where enforcing least privilege access matters: every route should allow only the minimal actions required, but vibe-coded apps often skip that step by default.

Impact: Data exposure and privilege escalation through ordinary-looking requests that pass basic auth checks.
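
Least privilege per route can be expressed as a deny-by-default permission table. This is a sketch under the assumption of a simple role model; the routes, role names, and `is_allowed` are hypothetical, and a real app would usually combine this with record-level ownership checks.

```python
# Hypothetical route table: anything not listed here is denied.
ROUTE_PERMISSIONS = {
    ("GET", "/bookings"): {"user", "admin"},
    ("POST", "/bookings"): {"user", "admin"},
    ("DELETE", "/bookings"): {"admin"},
}


def is_allowed(method: str, path: str, role: str) -> bool:
    """Allow a request only if the route explicitly lists the caller's role."""
    allowed_roles = ROUTE_PERMISSIONS.get((method.upper(), path), set())
    return role in allowed_roles
```

The useful property is the default: a newly generated endpoint that nobody added to the table is rejected rather than silently open to every authenticated user.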

Prompt injection through the dev workflow

Assistants read the surrounding context to be useful. That can include Jira tickets, README files, PR comments, and even code review threads. If any of that text contains planted instructions, the tool treats them as part of the task, not as an attack, and follows along.

Impact: Source code or secrets exfiltrated as if they were normal assistant output, leaving few obvious signs in application logs.

Path traversal and related input flaws

Autogenerated utilities often build file paths and shell commands with string concatenation. Give that code a user supplied filename and you control where the system reads and writes. Without normalization and strict allowlists, a value like ../public/report.html will step out of the intended directory, overwrite files, or plant content that ends up served to users.

Impact: Directory traversal, file overwrite, and command or query injection that turns helper features into entry points.
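
For shell commands, the safer pattern is a strict allowlist on the input plus an argument list instead of a concatenated string. In the sketch below, `convert-report` is a made-up command-line tool used purely for illustration, and the filename rule is an assumption you would adapt to your own naming scheme.

```python
import re
import subprocess

# Assumed naming rule: short, plain names ending in .pdf, no path separators.
SAFE_NAME = re.compile(r"[A-Za-z0-9_-]{1,64}\.pdf")


def build_convert_command(filename: str) -> list[str]:
    """Build an argument list for a hypothetical `convert-report` tool."""
    if not SAFE_NAME.fullmatch(filename):
        raise ValueError(f"filename not allowed: {filename!r}")
    # A list of arguments is passed without a shell, so characters such as
    # ';' or '|' are never interpreted as shell syntax.
    return ["convert-report", "--input", filename]


def run_convert(filename: str) -> None:
    """Run the command without shell=True; raises if the tool fails."""
    subprocess.run(build_convert_command(filename), check=True)
```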

These aren’t every possible flaw, but they’re the ones that show up most often when apps are built at vibe-coding speed. Bottom line: builders concentrate risk in invisible defaults and publish flows, assistants spread risk across fast-growing code and tooling scope. In both worlds the model does not know your trust boundaries, and when everyone can ship, no one owns the review by default.

Making vibe coding safer without killing the vibe

If vibe coding spreads fast, security needs to keep up without slowing things down. That doesn’t mean formal processes or full-blown security reviews on day one. It means small guardrails that catch common failures early, and scale with the way people actually work.

Start with minimal threat modeling

Not everything needs a risk register. But if you’re creating an app, at least pause to ask: what could go wrong? What’s exposed, or is in danger of leaking? Where could a bad actor sneak through?

Even a scribbled checklist with notes such as “input is sanitized,” “no secrets in logs,” or “admin access is restricted” is significantly better than blindly trusting generated code.

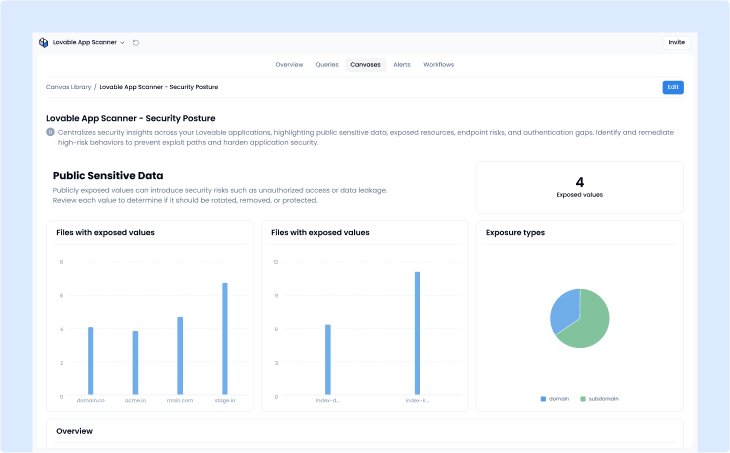

Automate scanning from the start

The biggest mistake? Waiting until the MVP is live. Scanning should run from the first commit, not the first incident. Plenty of code scanners cost too much or require more integration than the average builder can handle – and this is where lightweight scanners like Sola’s Lovable Security Scanner come in: fast checks on common problems, with basically zero setup. The goal is to catch issues as early as possible, before shortcuts harden into security debt.

Enforce safe defaults

Most vibe-coded apps skip the hard parts unless they’re explicitly asked for. So make security the default path:

- Validate all input

- Deny all access by default, then open only what’s needed

- Sanitize file paths, commands, and query strings

- Avoid verbose logs unless you’re actively debugging

- Never hardcode secrets, even for a demo. Use secret managers, even if they feel like overkill. Keep secrets out of .env.example files, client code, logs, and public repos. Assume that whatever gets pasted once will be copied again.

- Keep admin operations private; never expose admin logic to the client side

- Return only the data required, and avoid sending sensitive information to the client

- Avoid sequential IDs (/docs/1, /docs/2) that make resource locations easier to guess.
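
Two of the defaults above, hard-to-guess IDs and minimal responses, are small enough to sketch directly. The field names and helper names here are hypothetical, and random IDs are a complement to authorization checks, not a substitute for them.

```python
import secrets


def new_document_id() -> str:
    """Generate a random, URL-safe ID instead of a sequential number."""
    return secrets.token_urlsafe(16)


# Hypothetical allowlist of fields that may be returned to the client.
PUBLIC_USER_FIELDS = ("id", "display_name")


def to_public_user(record: dict) -> dict:
    """Return only allowlisted fields; everything else stays on the server."""
    return {k: record[k] for k in PUBLIC_USER_FIELDS if k in record}
```

A record such as `{"id": "u1", "display_name": "Dana", "password_hash": "..."}` comes back with only `id` and `display_name`, so a field added to the database later is not exposed by default.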

Monitor dependency sprawl

Package suggestions from assistants aren’t curated. They’re often based on what’s common or syntactically similar – not what’s safe. Typosquatted packages have already made it into production through AI coding assistants. At minimum, track what’s in use, pin versions, and alert on vulnerable or high-risk dependencies.
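
Checking that versions are pinned is easy to automate. The sketch below assumes a Python project with a `requirements.txt` style file and flags any requirement that is not pinned with `==`; `unpinned_requirements` is a hypothetical helper, and it does not check whether a pinned package is itself trustworthy.

```python
import re

# A requirement counts as pinned if it has the form name==version,
# optionally with extras such as name[extra]==version.
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[^\]]+\])?==[^=\s]+")


def unpinned_requirements(text: str) -> list[str]:
    """Return requirement lines that are not pinned to an exact version."""
    unpinned = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.match(line):
            unpinned.append(line)
    return unpinned
```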

Own the output. AI won’t do it for you

Some platforms are more aware of the security vulnerabilities their users introduce. Lovable flags bad patterns, Cursor suggests safer handlers – but they’re still reactive. AI doesn’t understand your architecture, your threat model, or your compliance needs. It just writes what you asked for.

Generated code might look polished, but don’t assume it handles access control, input validation, or secret hygiene unless you explicitly prompted for those things. And even then, treat it with suspicion. AI doesn’t know who should access what, or where sensitive data flows. It doesn’t understand risk, but someone has to. Whether it’s a dedicated reviewer, the original author, or just the last person to commit the file – the output needs an owner.

The way forward for vibe coding and security

Vibe coding platforms are getting smarter about security. Some flag risky patterns in real time. Others add extensions and API hooks so scanners can run without breaking the flow. That’s progress – but far from the whole job.

No vibe coding platform monitors security continuously.

Vibe coding platforms’ checks are improving, but continuous, cross-stack monitoring is still rare. Platforms don’t watch how issues evolve across repos or flag when a change in one service quietly breaks the posture of another. After you hit Publish, code shifts, dependencies change, and configurations drift. Teams need something that stays with the app long after the demo and can see beyond one tool’s sandbox.

Security teams should know what AI assistants are running inside their environment, what access they have, and what data they can touch or send out. Developers should keep platform security features on, connect repos and cloud so exposure is visible, treat shared configs like untrusted code, and make sure someone owns security reviews for every app.

Security is moving closer to where code is generated, but it also needs visibility across all projects and systems. The winning approach combines platform checks with continuous, stack-wide oversight. Even more importantly, democratized coding needs equally accessible security: security checks that are easy to turn on, run automatically, and fit into the same fast workflows that make vibe coding appealing. That’s where Sola fits, while you keep shipping at vibe-coding speed.

Key takeaways

So, what can you really do about vibe coding security vulnerabilities?

- Catch issues where they start – Run lightweight scanning inside your AI builder so vulnerabilities surface while people are still building. The Sola app for Lovable is designed for that exact moment.

- Keep scanning after the demo – Link GitHub, cloud accounts, and other stack components to get a single view of exposed buckets, overbroad IAM roles, risky packages, and insecure publish settings.

- Stay aware of your AI tools – Track which assistants are in use, what permissions they hold, and what data they can reach or send outside your environment.

- Make ownership explicit – Assign a responsible person for each app’s security reviews.

- Think in layers – Combine platform checks with continuous, stack-wide monitoring.

With Sola, both security teams and developers can keep visibility high, catch the common mistakes vibe-coded apps make, and maintain security without slowing down the speed that makes vibe coding so appealing.


FAQ: Vibe Coding Security

What are the most common vibe coding security vulnerabilities?

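The most common ones are unrestricted access to resources, exposed credentials and secrets, missing authorization, prompt injection, and path traversal and related input flaws. Sola helps surface these weaknesses early with lightweight scanning built for fast workflows.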

How can teams address vibe coding security concerns without slowing innovation?

Add scope/consent reviews for assistants and plugins, pin and verify dependencies, and run scanners from the first commit so the guardrails live inside the developer workflow rather than after the fact.

Why is continuous monitoring critical for vibe coding security?

Ongoing scanning and alerts catch scope creep, newly introduced secrets, vulnerable packages, and permissive resources as they appear, keeping best practices alive long after the MVP ships and turning silent drift into visible, fixable issues.

What are the main vibe coding security challenges with end-to-end builders vs developer assistants?

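Builders concentrate risk in invisible defaults and publish flows, while assistants spread risk across fast-growing code and tooling scope. Because neither model understands your trust boundaries by default, continuous, cross-stack scanning is essential to surface unsafe defaults, excessive permissions, secrets, and dependency issues regardless of how the code or configuration was produced.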

What makes vibe coding security risks different from traditional software development?

Unlike traditional reviews that assume stable pipelines and clear code ownership, vibe coding risk often stems from unsafe defaults and uncertain ownership, so treat defaults as untrusted, make a single owner accountable for review, and remember that “authenticated” ≠ “authorized” by enforcing resource-level checks rather than relying on login alone.

Chief Information Security Officer, Sola Security

Yoni has spent the past decade leading security engineering at companies like Meta (formerly Facebook) and AppsFlyer, and now brings his sharp eye and steady hand to Sola as CISO. Known for phishing drills so sneaky they make the real attackers take notes, he stays chill even when everyone else is refreshing dashboards and reaching for incident snacks.