The adoption of AI for cybersecurity is in full swing. On the one hand, every product seems to have “AI-powered” stamped on it, whether that means a genuine rethink or just a small upgrade under the hood. On the other, it has never been easier to spin up your own GPT-style helper, wire in a few APIs, and call it a day. We’re in an era where building your own tool feels almost too easy.

That ease is why so many teams are experimenting. GPTs can draft code or spit out answers fast, but they do not know your environment. MCPs bring structure by plugging into real APIs, but they come with their own upkeep and blind spots.

This article looks at that middle ground: how GPTs and MCPs help, where they fall short, and what it takes to move toward an AI-native cybersecurity solution that does more than just look smart on paper.

TL;DR

- GPTs answer fast, but they do not know your environment, so on your own security questions they guess and skip controls. Good for ideas, not for owning risk.

- MCPs help by letting the model pull real facts from your systems, so basic security questions can be grounded in reality rather than guesses.

- However, at scale, MCPs struggle to deliver reliable security answers because context fragments across services and you end up maintaining schemas, scopes, and automation just to keep up.

- Sola gives clear, actionable security answers by combining your live data with AI that has security expertise baked in. It tracks change continuously with dashboards and alerts so you focus on decisions, not wiring.

LLMs and GPTs: a risky shortcut to security

Creating something with your own GPT sounds pretty simple these days, right? Just spin up a custom agent, feed it a few prompts, and suddenly you’ve got code, answers, and explanations on tap. For a side project or a quick demo it feels magical. When you’re looking for answers about your own security, it’s a trap.

A GPT doesn’t know your cloud services, identity provider roles, or code repositories. Unless you paste that in yourself, it’s operating blind. And even if you do spoon-feed details, the model optimizes for “something that works” rather than “something that’s safe.” That’s why default suggestions often lean toward insecure patterns. It can sound authoritative while actually making things up; amusing when it invents a new HTTP header, less so when it invents a compliance gap you now own.

And the output itself only makes things worse. What you get is vibe coding security at its finest: code that runs, but with missing authorization checks, sloppy validation, over-permissive configs, and secrets hardcoded for convenience.
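To make those failure modes concrete, here is a minimal sketch of the pattern. The report store, the function names, and the `REPORTS_API_KEY` variable are all hypothetical and exist only for illustration. The first function is the kind of code that "runs"; the second adds the validation and authorization checks that tend to go missing.

```python
import os

# Hypothetical in-memory data, for illustration only.
REPORTS = {"r1": {"owner": "alice", "body": "Q3 findings"}}


def get_report_insecure(report_id, user):
    # Runs fine, but any caller can read any report: there is no
    # authorization check and no validation of the identifier.
    return REPORTS[report_id]["body"]


def get_report(report_id: str, user: str) -> str:
    # Validate the input shape before using it as a lookup key.
    if not report_id.isalnum():
        raise ValueError("invalid report id")
    report = REPORTS.get(report_id)
    if report is None:
        raise KeyError("report not found")
    # Authorization: only the owner may read the report.
    if report["owner"] != user:
        raise PermissionError("not allowed")
    return report["body"]


def load_api_key() -> str:
    # Read the secret from the environment instead of hardcoding it.
    key = os.environ.get("REPORTS_API_KEY")
    if not key:
        raise RuntimeError("REPORTS_API_KEY is not set")
    return key
```

Both versions return the same result for the rightful owner, which is why the insecure one passes a quick demo. The difference only shows up when someone else asks.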

The point is clear enough: GPTs are great for learning, experimenting, and moving fast. They just aren’t designed to hold the keys to your actual security stack, which is why people start reaching for something more structured. That’s where MCPs enter the picture.

MCP: wiring the model into your stack

What is MCP for security?

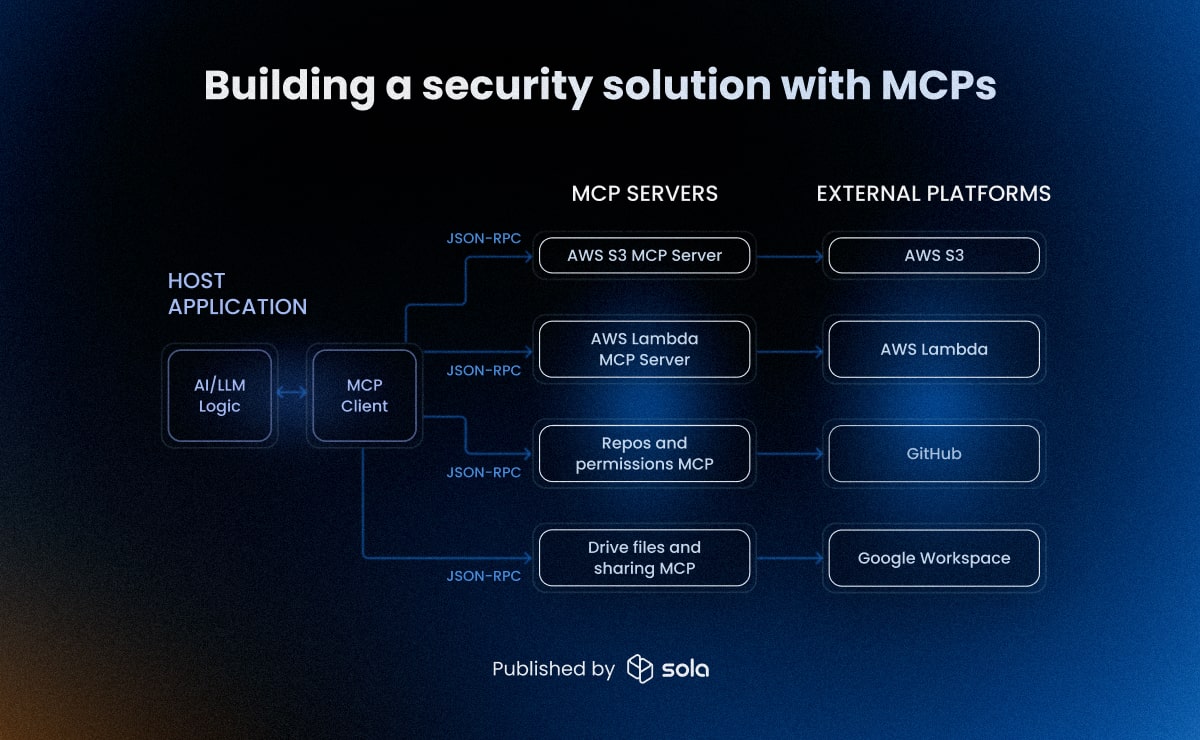

Model Context Protocol (MCP) lets a model stop guessing and start calling real tools. You stand up an MCP server, expose a clean schema over your internal APIs and SaaS, and the model can request specific facts or run bounded actions through those tools. In some setups, it can support a limited cybersecurity AI assistant pattern that asks about repos, identity roles, or bucket policies instead of guessing.

MCP can provide repeatable, context-aware workflows. You can codify checks, run them through tools your teams already use, and get answers tied to your environment. It is useful for building modest automations that propose fixes, verify configurations, and return supporting evidence.
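As a rough illustration of the idea, the sketch below models a single tool with a name, a description, and a simplified input schema, plus a dispatcher that checks arguments before calling it. It deliberately does not use any MCP SDK. The `Tool` class, `call_tool`, and the bucket inventory are assumptions made up for this example, and a real server would speak the protocol and use proper JSON Schema.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    input_schema: dict  # simplified: argument name -> expected Python type
    handler: Callable[..., Any]


# Hypothetical inventory standing in for a real cloud API.
BUCKETS = {"logs": {"public": False}, "site-assets": {"public": True}}


def get_bucket_policy(bucket: str) -> dict:
    if bucket not in BUCKETS:
        raise KeyError(f"unknown bucket: {bucket}")
    return {"bucket": bucket, **BUCKETS[bucket]}


TOOLS = {
    "get_bucket_policy": Tool(
        name="get_bucket_policy",
        description="Return whether a bucket is publicly readable.",
        input_schema={"bucket": str},
        handler=get_bucket_policy,
    )
}


def call_tool(name: str, arguments: dict) -> Any:
    tool = TOOLS.get(name)
    if tool is None:
        raise KeyError(f"unknown tool: {name}")
    # Reject missing or unexpected arguments rather than guessing.
    if set(arguments) != set(tool.input_schema):
        raise ValueError("arguments do not match the tool schema")
    for key, expected in tool.input_schema.items():
        if not isinstance(arguments[key], expected):
            raise TypeError(f"{key} must be {expected.__name__}")
    return tool.handler(**arguments)
```

A model asking "is `site-assets` public?" would resolve to `call_tool("get_bucket_policy", {"bucket": "site-assets"})`, and the answer comes from the inventory rather than from the prompt window.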

If it’s done well, you get faster triage and fewer hallucinations, since the model is reading from structured, relevant sources instead of whatever was in a prompt window.

But then, that’s not exactly a small “if”.

What building your own MCP does not solve

Standing up an MCP server and wiring it into your stack is only the beginning. MCP is plumbing that connects models to tools, not a finished outcome for security answers. It does not invent your threat model. It does not keep monitoring after a single query or scan. If the connected tools lack robust checks, the model has nothing solid to lean on. If your prompts are vague, the model will still aim for something that works rather than something that is safe.

This is where the tradeoff becomes unavoidable. Build it too narrowly and you miss data that matters. Build it too broadly and you dilute the signal. You end up throwing raw tools and APIs at the model without security logic, so answers lose context and slide toward generic. Add ten MCPs and you do not get ten times the clarity. You get competing schemas, overlapping data, and a model that cannot see the forest for the trees. And at scale, the cracks widen: you can’t pass gigabytes of data through MCPs without hitting rate limits, slowing to a crawl, or ending up with messy inputs.

The more complex the setup, the lower the chance of a coherent, security-aware answer unless you also engineer the orchestration, validation, and decision rules. Maintain it loosely and drift erodes its value. Maintain it tightly and you inherit a constant maintenance burden.

The balance never holds still. After getting the basics working with DIY MCPs, many teams look to provider-built connectors for less plumbing, only to find they still need cross-system consistency and ongoing oversight.

Provider MCPs: helpful connectors, limited context

But wait, what about ready-made MCPs? Providers such as AWS, Google, Microsoft, and many SaaS companies offer MCP servers. You get maintained, up-to-date APIs and saner access patterns, which speeds up wiring. That is the good part. The gap is semantics: they give you reliable access to raw APIs, but they do not understand which identities, folders, or roles are important in the context of your own organization.

Fragmentation is the other catch. Each provider limits scope to its own service, sometimes even to sub-services. In AWS, for example, identity and access for S3, Lambda, and RDS all require separate policies and integrations. Meanwhile, some useful surfaces are not exposed or only partially available in MCPs built by vendors. Okta integrations, for instance, can leave gaps in audit logs or delegated session activity, so key authentication events may never reach downstream monitoring.

After signal and context comes safety. The moment your model can act through MCP, security choices start to matter.

How MCP security can go sideways

MCP connects models to tools; it does not provide guardrails by default. Most MCP security issues come from implementation choices such as broad scopes, tool interfaces that accept unvalidated input, missing identity binding, and untracked drift.

The main MCP security problems:

- Prompt injection can trigger unintended tool calls if the model ingests untrusted content.

- Over-scoped tokens inflate blast radius, while under-scoped tokens miss critical data and give a false sense of safety.

- Weak schemas or vague prompts open the door to misuse and unintended tool chaining.

- Drift is constant: APIs change, permissions shift, and schemas age out without warning.

On top of design tradeoffs, there are broader Model Context Protocol security vulnerabilities seen in practice. Unvalidated inputs can allow actions you never intended, and verbose outputs or logs may reveal more than planned. When tool calls are not bound to a user identity, access can bleed across tenants or projects. Adding third-party MCP plugins introduces supply-chain risk.

These risks make MCP security best practices non-negotiable. Use least privilege, read-only defaults, narrow schemas with input validation, strong identity binding, and full logging. Treat the MCP layer as production infrastructure by versioning changes, testing, keeping rollbacks ready, and adding kill switches. That is how you avoid small design flaws snowballing into full-blown vulnerabilities.
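Several of those practices can be sketched as a single gate that every tool call passes through. This is a minimal illustration under stated assumptions, not a reference design: the `ToolSpec` catalog, the scope strings, the `authorize` function, and the `KILL_SWITCH` flag are all hypothetical names invented for this example.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("mcp_guard")


@dataclass(frozen=True)
class ToolSpec:
    name: str
    read_only: bool
    required_scope: str


# Hypothetical tool catalog; scope names are made up for illustration.
CATALOG = {
    "list_roles": ToolSpec("list_roles", read_only=True, required_scope="iam:read"),
    "delete_role": ToolSpec("delete_role", read_only=False, required_scope="iam:write"),
}

# Flipping this flag blocks every tool call without a redeploy.
KILL_SWITCH = {"enabled": False}


def authorize(tool_name: str, user: str, scopes: set, allow_writes: bool = False) -> bool:
    """Return True if the call may proceed; log every decision."""
    spec = CATALOG.get(tool_name)
    if KILL_SWITCH["enabled"]:
        allowed, reason = False, "kill switch enabled"
    elif spec is None:
        allowed, reason = False, "unknown tool"
    elif not user:
        allowed, reason = False, "call not bound to a user identity"
    elif not spec.read_only and not allow_writes:
        allowed, reason = False, "writes are off by default"
    elif spec.required_scope not in scopes:
        allowed, reason = False, "missing scope"
    else:
        allowed, reason = True, "ok"
    log.info("tool=%s user=%s allowed=%s reason=%s", tool_name, user or "-", allowed, reason)
    return allowed
```

The ordering is a design choice: the kill switch and identity checks come before scope checks, so an anonymous or emergency-blocked call is refused no matter how broad its token is.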

MCP shortcomings for security tools

Taken together, these gaps leave MCPs with some clear shortcomings when used as the basis for security tools. Many of them show up first in the quality of answers, before you even get to the security concerns:

- No built-in threat model or org semantics

- Fragmented by service, weak at cross-stack relationships

- Partial visibility when key APIs are not exposed

- You still own stitching, validation, and review to avoid false confidence

- Quality depends on your schemas, scopes, and prompts

And even when those are handled, the operational and security risks remain:

- Continuous monitoring is not provided by default

- Ongoing maintenance to handle API changes and permission drift

- Prompt injection and tool misuse remain risks without strict guardrails

Sola: less wiring, more useful security answers

Teams that try GPTs and then wire up multiple MCPs might gain speed and better context, but they also inherit a heavy upkeep burden. They end up deciding what the model is allowed to see and teaching it how to use those tools.

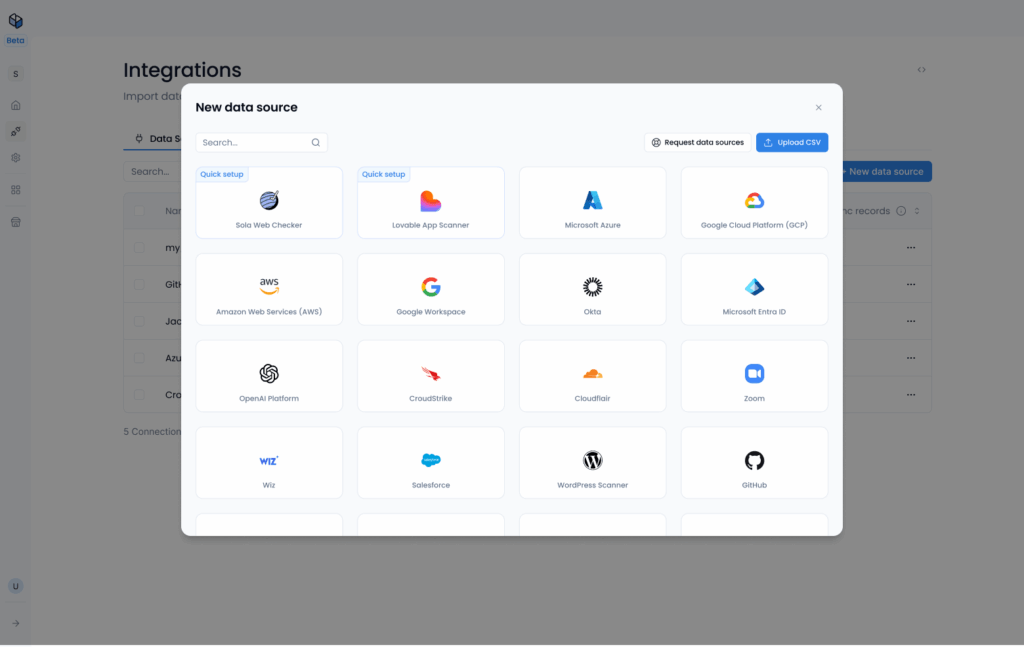

Sola is built to handle that plumbing, so security teams can focus on asking real questions and acting on the answers. It connects to your stack with read-only scopes, standardizes the data it collects into a single model of your environment, and keeps tracking change over time.

What sets Sola apart from GPTs and MCPs comes down to three things: it gives you a full picture instead of scattered APIs, fits into the way code is actually shipped, and stays always-on with a security-first design.

A full security picture through a cybersecurity graph

One of the biggest gaps with MCPs is that they give you access to individual services but not the bigger picture. You can wire up AWS, an identity provider, or Google Workspace, but each one speaks its own language and you still need to stitch the pieces together. That leaves you guessing at how permissions, repos, and buckets actually connect.

Sola adds an additional layer of context. It connects to multiple data sources, then normalizes what it pulls into a single cybersecurity graph. Instead of raw API rows, you get relationships: which IAM role links to which repo, which bucket is exposed, who can actually reach it. And because the data collection is handled by the platform, you don’t run into the same scale problems as with MCPs. Rather than juggling fragmented APIs and worrying about what you missed, you see how risks play out across systems in a connected view.
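To show the difference between raw rows and relationships in general terms, here is a toy graph with a path query. It is not Sola’s implementation; the node names, the edge list, and the `find_path` helper are all hypothetical, and a real graph would be far larger and richer.

```python
from collections import deque

# Hypothetical normalized edges: (source, relation, target).
EDGES = [
    ("user:dana", "member_of", "group:devs"),
    ("group:devs", "assumes", "role:ci-deployer"),
    ("role:ci-deployer", "can_write", "repo:payments"),
    ("role:ci-deployer", "can_read", "bucket:customer-exports"),
    ("user:lee", "can_read", "bucket:public-site"),
]


def build_graph(edges):
    """Turn the edge list into an adjacency map: node -> [(relation, target)]."""
    graph = {}
    for src, rel, dst in edges:
        graph.setdefault(src, []).append((rel, dst))
    return graph


def find_path(graph, start, goal):
    """Breadth-first search; returns the shortest node chain or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for _rel, nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None
```

No single API row says that `user:dana` can read `bucket:customer-exports`. The answer only appears once group membership, role assumption, and bucket access are joined into one structure.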

Higher quality, contextual answers

Having a full picture is one thing. Getting reliable answers out of it is another. GPTs often sound confident but hallucinate when they lack context. MCPs cut down on that by providing structured access, but the outcome still hinges on the quality of your schemas and prompts, and handling data at scale remains a significant challenge.

Sola combines real data from your environment with built-in security expertise. That mix produces answers that are not only grounded but also shaped by domain knowledge. Instead of dumping everything on the model and hoping it sorts it out, Sola breaks broader problems into smaller security questions (how to solve an issue, how to validate it, and how it fits into the bigger picture) and then stitches the results back together.

The outcome is higher-quality, contextual answers you can use with confidence, not outputs that need to be second-guessed.

Continuous monitoring

GPTs and MCPs work at query time. You ask a question, they respond, and then they wait for the next input. Users can add workflow automation tools (n8n, Zapier, Airflow), but that adds another layer to maintain and the simplicity quickly fades. The gap remains: security exposures do not announce themselves on a schedule. Configurations change over time, new repos appear, and permissions expand quietly in the background. By the time you ask again, the risk may already be live.
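The quiet permission growth described above is easy to miss at query time and easy to see once you compare snapshots. The sketch below is a generic illustration; the snapshot shape (`{principal: set of permissions}`) and the `diff_permissions` helper are assumptions for this example, and something still has to take those snapshots on a schedule.

```python
def diff_permissions(before: dict, after: dict) -> dict:
    """Compare two {principal: set(permissions)} snapshots.

    Returns only the principals whose permissions changed, with the
    added and removed permissions listed in sorted order.
    """
    changes = {}
    for principal in sorted(set(before) | set(after)):
        old = before.get(principal, set())
        new = after.get(principal, set())
        added, removed = new - old, old - new
        if added or removed:
            changes[principal] = {
                "added": sorted(added),
                "removed": sorted(removed),
            }
    return changes


# Hypothetical snapshots taken a day apart.
monday = {"role:ci": {"s3:read"}, "user:dana": {"repo:read"}}
tuesday = {"role:ci": {"s3:read", "s3:write"}, "user:dana": {"repo:read"}, "role:tmp": {"iam:admin"}}
drift = diff_permissions(monday, tuesday)
```

Here `drift` flags the new `s3:write` grant on `role:ci` and the brand-new `role:tmp`, while the unchanged `user:dana` stays out of the report.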

With Sola, you can track changes across repos, cloud accounts, and identities as they happen, surfacing new exposures right when they appear. You get ongoing awareness that feeds into your Sola workspace, with results routed into alerts, workflows, and visual dashboards that make risk trends clear over time. Continuous monitoring becomes more than automation: it gives you context you cannot get from GPTs or MCPs out of the box, so security is less about catching up and more about staying aligned with your stack.

Built for security, by default

MCPs leave security decisions to the implementer: scopes, validation, identity binding, and guardrails are all your responsibility. Sola flips that by baking the right boundaries in from the start. Strict trust controls and input/output sanitization reduce the risk of messy data handling turning into messy answers.

Instead of turning setup into a balancing act between too much access and too little context, Sola’s platform ensures the information flowing into your workspace is safe to use. That reduces overhead for teams and keeps the blast radius low if something goes wrong.

Key takeaways for choosing an AI-native solution

Generic GPTs:

- Where they help: quick ideas, refactors, explanations, and throwaway prototypes.

- Where they break: no awareness of your environment, prone to insecure defaults and confident mistakes. Fine for learning, not for running security work.

MCPs:

- What they add: structured access to your tools and data so answers are grounded in real systems.

- What they cost: fragmented by service, lose context at scale, constant upkeep of scopes and schemas, and security risks like drift, over-scoping, and prompt injection. Decent plumbing, but not a finished security outcome.

Sola:

- What you get: purpose-built for AI-driven security. Instead of just wiring an LLM to your stack, Sola securely collects data at scale, then uses its built-in security knowledge to understand relationships and risks in your tech stack.

- What that gives you: a single, contextual view across data sources, where identities, repos, and data are linked so answers reflect your actual state. You stay ahead of drift with continuous monitoring that flags meaningful changes and routes them into your workspace and workflows.

How to choose between your AI options

- Use GPTs to explore and draft.

- Use MCPs when you want to wire a model to specific tools and you are ready to own design and upkeep.

- Use Sola when you want the context, the ongoing tracking, and a practical way to ask real security questions without building and maintaining the plumbing yourself.

Chief Information Security Officer, Sola Security

Yoni has spent the past decade leading security engineering at companies like Meta (formerly Facebook) and AppsFlyer, and now brings his sharp eye and steady hand to Sola as CISO. Known for phishing drills so sneaky they make the real attackers take notes, he stays chill even when everyone else is refreshing dashboards and reaching for incident snacks.